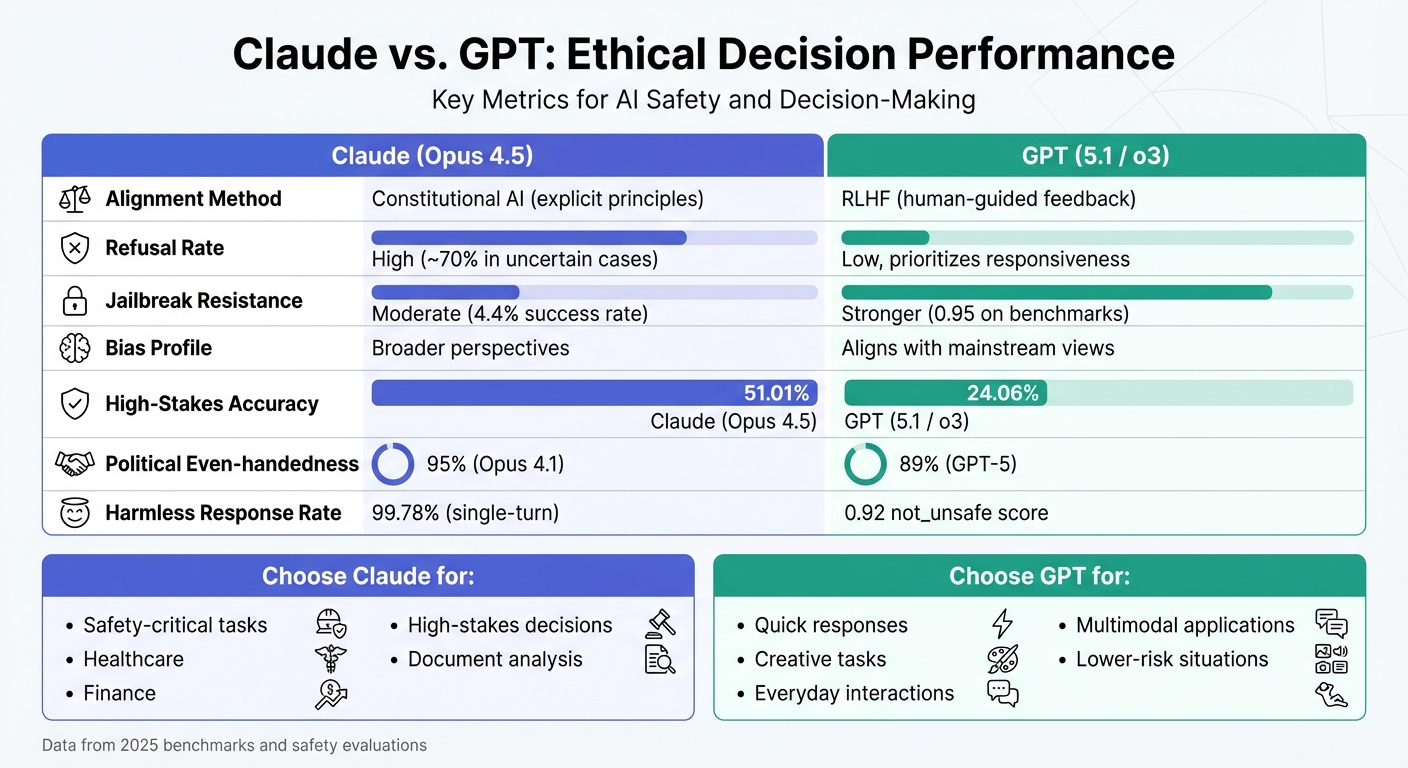

When comparing Claude and GPT for ethical decision-making, their approaches stand out clearly:

- Claude uses "Constitutional AI", guided by explicit principles like the UN Declaration of Human Rights. This results in cautious, safety-first responses, often refusing uncertain queries (up to 70%) to avoid errors.

- GPT relies on Reinforcement Learning from Human Feedback (RLHF), which prioritizes user preferences for more flexible responses. While this reduces refusal rates, it increases the risk of inaccuracies or biases.

Key Takeaways:

- Claude excels in high-stakes scenarios (e.g., healthcare, finance) with strong safeguards against harmful outputs and higher accuracy in ethical dilemmas.

- GPT offers faster, more interactive responses, making it suitable for everyday tasks and creative applications, though it may occasionally prioritize utility over caution.

- Claude resists jailbreak attempts effectively but slightly trails GPT in advanced security benchmarks.

- GPT demonstrates improved performance in handling nuanced ethical dilemmas but aligns more closely with mainstream perspectives.

Quick Comparison

| Feature | Claude (Opus 4.5) | GPT (5.1 / o3) |

|---|---|---|

| Alignment Method | Constitutional AI (explicit) | RLHF (human-guided feedback) |

| Refusal Rate | High (~70% in uncertain cases) | Low, prioritizes responsiveness |

| Jailbreak Resistance | Moderate (4.4% success rate) | Stronger (0.95 on benchmarks) |

| Bias Profile | Broader perspectives | Aligns with mainstream views |

| High-Stakes Scenarios | Higher accuracy (51.01%) | Lower accuracy (24.06%) |

Choose Claude for cautious, safety-critical tasks and GPT for quick, interactive responses in lower-risk situations. Both models are advancing ethical AI standards, but their strengths suit different needs.

Claude vs GPT Ethical AI Performance Comparison Chart

Claude‘s Approach to Ethical Decision-Making

Constitutional AI: How Claude Makes Ethical Decisions

Anthropic’s Claude takes a unique path in AI alignment through Constitutional AI (CAI) – a structured set of principles that guide its behavior. These principles act as a foundation for ethical decision-making, ensuring the model operates within clear boundaries.

The process begins with Claude learning to evaluate and refine its responses based on these constitutional principles and provided examples. From there, it uses AI-generated feedback to further fine-tune its outputs, prioritizing both helpfulness and safety. This two-step training method creates what Anthropic refers to as a "Pareto improvement", where Claude becomes simultaneously more helpful and less harmful – avoiding the trade-offs that traditional systems often face.

The constitution itself pulls from a variety of global principles, allowing it to adapt to different contexts. Anthropic highlights a key advantage of this approach:

"Constitutional AI is also helpful for transparency: we can easily specify, inspect, and understand the principles the AI system is following".

This transparency makes it easier for developers to adjust Claude’s behavior. If an issue arises, they can simply add or revise a principle to address it – an approach far simpler than trying to tweak opaque "black box" feedback systems.

These design choices not only improve ethical decision-making but also set a benchmark for safety performance.

Benchmark Performance and Safety Scores

Claude’s ethical framework translates into measurable success in safety evaluations. For example, in November 2025, Claude Opus 4.1 and Claude Sonnet 4.5 achieved political even-handedness scores of 95% and 94%, outperforming GPT-5’s 89%. This demonstrates a more balanced treatment of differing viewpoints.

One of Claude’s standout features is its ability to resist jailbreak attempts. Using "Constitutional Classifiers", a real-time defense mechanism, Claude reduced its jailbreak success rate from 86% to just 4.4%, effectively blocking over 95% of advanced attempts. During a 2025 bug-bounty program, 183 participants dedicated over 3,000 hours to finding a "universal jailbreak" for Claude’s system. None succeeded within the two-month testing period.

Despite these robust safeguards, the refusal rate for harmless queries increased by only 0.38%. Additionally, Claude Opus 4.5 achieved a 99.78% harmless response rate for single-turn violative requests across multiple languages. In high-risk scenarios, such as those involving computer and browser control, Claude Opus 4.5 refused 88.39% of harmful requests, a significant improvement from the previous version’s 66.96%.

How Claude Handles Sensitive Scenarios

Claude’s constitutional framework is particularly evident in how it manages sensitive topics. The model is designed to avoid offering medical, legal, or financial advice, instead encouraging users to consult professionals. However, it can provide general information in these areas – for instance, explaining how mortgages work without recommending specific investment strategies.

For medical inquiries, Claude avoids portraying itself as a medical authority or providing specific advice, though it can discuss general topics like biology. Similarly, for legal and financial matters, it refrains from offering guidance, instead recommending users seek advice from qualified experts.

This cautious design is reflected in Claude’s refusal rate, which reaches 70% in some hallucination benchmarks. The model is programmed to recognize its own uncertainty and prioritize user safety by opting for silence over potentially misleading information. As Dave Eagle, an AI expert at Type.ai, puts it:

"Where ChatGPT has high IQ, Claude has high EQ".

This focus on emotional intelligence ensures that Claude values user safety and trust over trying to appear helpful in situations where accuracy cannot be guaranteed.

GPT‘s Approach to Ethical Decision-Making

Alignment Methods and Safety Policies

OpenAI employs a structured approach to align GPT models with ethical behavior, centering on Reinforcement Learning from Human Feedback (RLHF). This process unfolds in three key steps: first, human labelers craft sample responses, which the model uses for supervised fine-tuning. Next, labelers rank multiple model outputs, training a Reward Model to identify human preferences. Finally, the model undergoes fine-tuning using Proximal Policy Optimization to maximize these preferences.

Interestingly, human evaluators favored outputs from a 1.3 billion parameter InstructGPT model over those from the much larger 175 billion parameter GPT-3 model. This highlights how impactful human feedback can be in guiding ethical decision-making, even with smaller models.

OpenAI’s newer models, such as o1, introduce an additional layer called deliberative alignment. These models use a "chain-of-thought" reasoning process to navigate safety policies before delivering a response. OpenAI elaborates:

"Reasoning allows o1 models to follow specific guidelines and model policies we’ve set, helping them act in line with our safety expectations".

To further ensure safety, these models follow a strict instruction hierarchy – prioritizing system, developer, and user guidelines in that order. This structure helps prevent jailbreak attempts from bypassing core safety constraints. Together, these alignment methods contribute to GPT’s improved performance benchmarks.

Benchmark Performance and Response Flexibility

Recent GPT models have shown marked improvements in safety and performance. For instance, the o1 model achieved a 0.92 "not_unsafe" score on Challenging Refusal Evaluations, significantly outperforming GPT-4o’s 0.713. In jailbreak resistance tests, o1 scored 0.95 on the Tutor Jailbreak benchmark and a perfect 1.00 on Password Protection evaluations, compared to GPT-4o’s 0.33 and 0.85, respectively.

Unlike Claude’s constitutional approach, GPT models rely on RLHF, which allows them to remain responsive even in ambiguous situations. While this approach results in lower refusal rates and prioritizes utility, it also increases the risk of inaccuracies. That said, GPT-5 has reduced factual errors by 80%, and the o1 model lowered hallucination rates in the SimpleQA dataset to 0.44, compared to GPT-4o’s 0.61.

In ethical dilemma scenarios, GPT-4o-mini outperformed competitors, scoring an average of 45.25%, compared to Claude 3.5 Sonnet’s 41.11%. Researchers at UC Berkeley noted:

"GPT-4o-mini demonstrated the most consistent performance, particularly excelling in identifying key factors and articulating resolution strategies".

Moreover, GPT models have shown greater resilience against jailbreak attempts than Claude 4 models. These performance metrics underscore the balance GPT strikes between responsiveness and safety, particularly in sensitive scenarios.

How GPT Handles Sensitive Scenarios

To address sensitive or high-risk situations, GPT incorporates policy-based moderation and external red teaming. OpenAI works with organizations like the U.S. and UK national AI Safety Institutes to stress-test the models for risks such as cybersecurity threats before deployment. During testing with 100,000 synthetic prompts, only 0.17% of o1-preview responses were flagged as "deceptive." Most flagged cases involved hallucinated policies rather than deliberate misinformation.

Despite these efforts, some studies have identified biases in specific contexts. For example, GPT-3.5 has been shown to align more closely with traditional power structures in the U.S. compared to Claude’s broader range of perspectives. Researchers Wentao Xu, Yile Yan, and Yuqi Zhu observed:

"GPT-3.5 Turbo showed stronger preferences aligned with traditional power structures, [while] Claude 3.5 Sonnet demonstrated more diverse protected attribute choices".

This tendency to favor mainstream perspectives can unintentionally disadvantage marginalized communities. Additionally, GPT’s lower refusal rate means it’s more likely to respond in gray areas where withholding an answer might be safer. This trade-off between helpfulness and caution is something users must consider when relying on GPT for high-stakes ethical decisions.

Claude vs. GPT: Direct Comparison of Ethical Decision-Making

Comparison Table: Key Metrics

When comparing Claude’s approach to ethical decision-making with GPT’s, the differences in their core methodologies stand out. Claude’s Constitutional AI operates on a foundation of explicit, written principles, whereas GPT relies on Reinforcement Learning from Human Feedback (RLHF), where human-guided feedback shapes its responses indirectly.

| Dimension | Claude (Opus 4.5 / 4) | GPT (5.1 / o3 / 5) |

|---|---|---|

| Alignment Method | Constitutional AI (explicit principles) | RLHF (implicit human feedback) |

| Safety Benchmark Scores | High refusal rate (~70%) in uncertain scenarios | Lower refusal rate with higher utility but more hallucinations |

| Jailbreak Resistance | Moderate; less robust than o3 | Stronger (o3 scored 0.95 on Tutor Jailbreak) |

| Instruction Hierarchy | Superior performance in resisting system–user conflicts | Strong, though slightly trailing Claude |

| Ethical Reasoning Depth | Methodical, step-by-step, and cautious | Flexible, balanced, and informative |

| Bias Profile | More diverse attribute choices | Tends to favor established power structures |

Looking at performance metrics, Claude‑4‑Sonnet demonstrates a cautious and measured approach, achieving 51.01% accuracy in high-stakes scenarios compared to GPT‑5’s 24.06%. This highlights Claude’s strength in navigating complex moral decisions, while GPT’s lower refusal rate makes it more responsive in everyday use.

Alignment Methods: Constitutional AI vs. RLHF

Claude’s Constitutional AI is guided by a set of global principles, including the UN Declaration of Human Rights, to ensure responses align with ethical standards. According to Anthropic researchers:

"Constitutional AI provides a successful example of scalable oversight, since we were able to use AI supervision instead of human supervision to train a model to appropriately respond to adversarial inputs".

In contrast, GPT’s RLHF approach relies on human contractors ranking various outputs to train a reward model. This method strikes a balance between safety and openness but lacks the transparency of Claude’s principle-based system. Additionally, Claude is more likely to avoid engaging with high-risk or morally complex questions.

Performance in High-Stakes U.S. Scenarios

The differences in alignment methods become even more evident in U.S.-specific ethical challenges. In May 2025, UC Berkeley researchers tested both models on 196 ethical dilemmas from the Georgia Clinical & Translational Science Alliance. These cases, focused on issues like healthcare inequities and informed consent, revealed that GPT‑4o‑mini excelled at identifying key factors and offering resolution strategies. Meanwhile, Claude 3.5 Sonnet struggled to provide detailed analyses of these nuanced, U.S.-centric cases.

Bias patterns were also notable. A study spanning 11,200 trials found that GPT‑3.5 Turbo leaned toward traditional power structures, while Claude 3.5 Sonnet provided more varied responses regarding protected attributes. In joint safety tests conducted in August 2025, Claude 4 models performed on par with or slightly better than OpenAI o3 in resisting system prompt extraction attempts. However, GPT’s o3 and o4‑mini models outperformed Claude in specific jailbreak tests, with GPT scoring 0.95 on the Tutor Jailbreak benchmark compared to Claude’s moderate resistance.

For industries like healthcare, education, and finance in the U.S., these findings carry practical implications. GPT’s stronger defenses against manipulation make it a reliable choice for environments requiring high security, while Claude’s cautious, accuracy-first approach is better suited for situations demanding careful ethical consideration in ambiguous scenarios.

sbb-itb-f73ecc6

Conclusion: Choosing the Right Model for Ethical Decisions

Key Takeaways from the Comparison

Claude and GPT each stand out in their own way when it comes to ethical decision-making. Claude Opus 4.5 shines in handling complex tasks with methodical reasoning. For instance, on the CLASH benchmark, Claude-4-Sonnet achieved an accuracy of 51.01%, far outpacing GPT-5’s 24.06%. Claude also demonstrates stronger adherence to instruction hierarchy, effectively managing conflicts between system and user directives.

On the other hand, GPT-5 and OpenAI o3 focus on speed, adaptability, and multimodal functionality. While GPT tends to excel in everyday tasks with lower refusal rates, it occasionally stumbles with complex logical scenarios. GPT’s safety features, like reasoning-based techniques, help minimize sycophantic behavior and misuse. When it comes to bias, Claude 3.5 Sonnet offers more diverse attribute options, whereas GPT-3.5 Turbo often aligns with more traditional power structures.

These differences are crucial when deciding how to deploy each model effectively.

Practical Guidance for Users

Choosing between Claude and GPT depends on the specific requirements of your task.

- Claude is ideal for safety-critical fields such as healthcare, legal, or education. Its cautious nature makes it particularly suited for analyzing large documents or acting as a final reviewer for high-stakes decisions. Plus, its 200,000-token context window allows for deep and thorough document analysis.

- GPT is better suited for creative tasks, multimodal applications, or quick, everyday responses. GPT-5’s dual-mode system offers a blend of speed for simpler tasks and a more reflective approach for complex ones. For businesses that prioritize custom fine-tuning or need fast, efficient customer interactions, GPT provides the flexibility to deliver results without unnecessary hesitation.

The Future of Ethical AI Decision-Making

The strengths of these models today are paving the way for more robust ethical AI standards. The field of AI safety is advancing quickly, with labs like OpenAI and Anthropic collaborating to improve safety measures. They’ve started conducting joint safety evaluations, using red-teaming tools to uncover and address alignment gaps in each other’s models. As one OpenAI researcher put it:

"It is critical for the field and for the world that AI labs continue to hold each other accountable and raise the bar for standards in safety and misalignment testing".

Both labs are moving toward reasoning-based safety mechanisms, where models use internal chain-of-thought processes to verify the ethics of their responses before delivering them. Combined with ongoing research into mechanistic interpretability, this shift promises more transparent and accountable AI systems. Standardized evaluation frameworks will soon become essential for ensuring consistent safety standards.

GPT 4.5 vs Claude 3.7 – LLM Showdown

FAQs

How do Claude’s Constitutional AI and GPT’s RLHF approaches differ in ethical decision-making?

Claude operates using Constitutional AI, a system built around a defined set of principles – or a "constitution" – that shapes its ethical framework. This setup ensures that its decision-making process is clear, straightforward, and adaptable without needing humans to review harmful outputs during training.

In contrast, GPT utilizes Reinforcement Learning from Human Feedback (RLHF). This method involves the model learning from human preferences based on its outputs. While this helps align the model’s behavior with user expectations, the ethical guidelines remain implicit, less transparent, and depend on human reviewers assessing potentially sensitive or disturbing content.

Essentially, Claude emphasizes clarity and adaptability in its approach, while GPT leans on statistical learning guided by human feedback, making it harder to interpret but capable of adjusting to a wider range of preferences.

How do Claude and GPT handle ethical decisions in high-stakes situations?

Claude and GPT approach ethical decision-making in noticeably different ways, particularly in high-stakes situations. Claude leans toward being cautious and risk-averse, often opting for safer and more conservative paths. This tendency reflects its training, which prioritizes being helpful, avoiding harm, and adhering closely to ethical guidelines.

On the other hand, GPT shows a more assertive and less conservative style, displaying a willingness to take bolder actions in uncertain or complex scenarios. Its training, which emphasizes reinforcement learning from human feedback, places a stronger focus on adaptability and making decisive choices, even when risks are involved.

These contrasting approaches underline Claude’s emphasis on safety and harm reduction, while GPT aims to balance ethical considerations with a more confident and decisive decision-making process.

Which AI model is better for tasks requiring strong ethics and security?

Claude is often seen as the go-to option for tasks requiring strong ethical considerations and secure handling of information. This stems from its advanced training in safety, alignment, and fair reasoning, which frequently outperforms comparable features in GPT models.

For projects that deal with sensitive data or ethically nuanced decisions, Claude’s emphasis on responsible AI practices makes it a dependable choice.