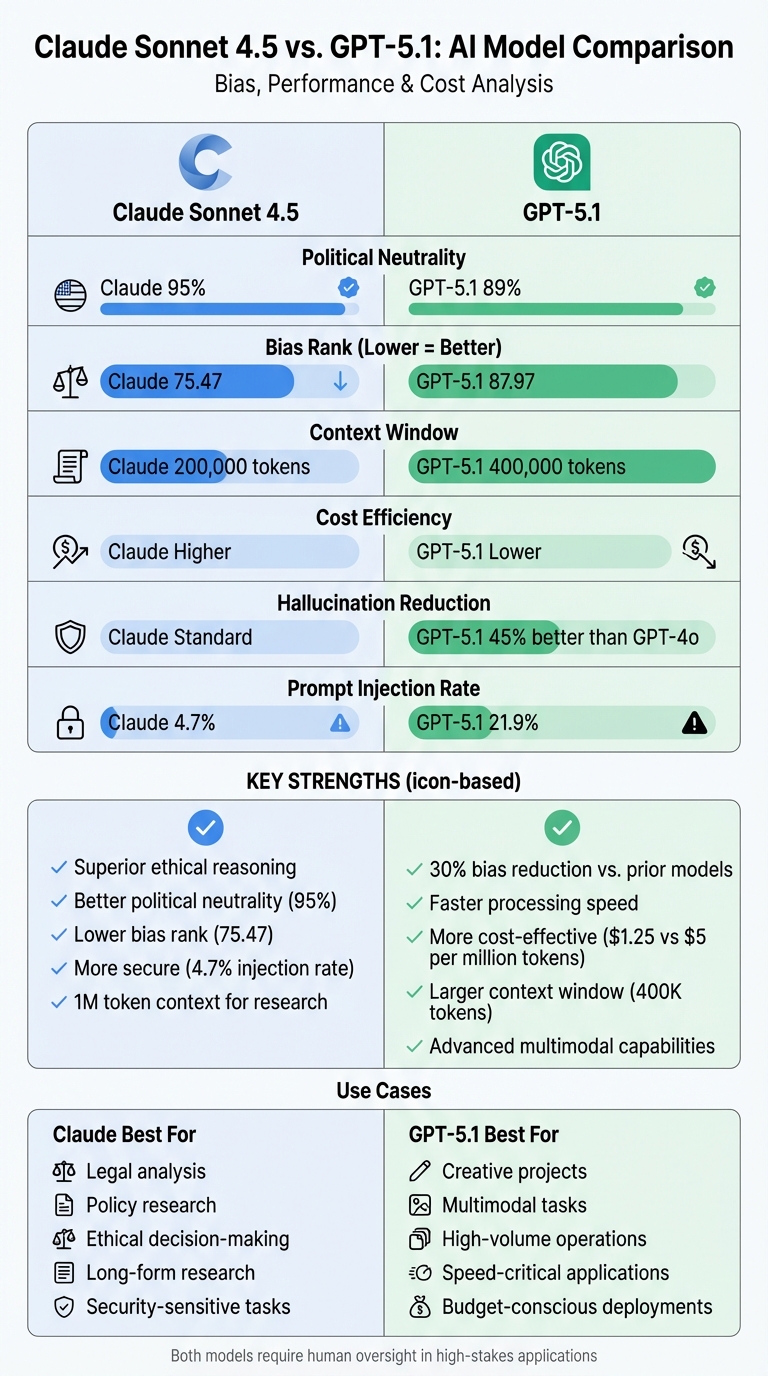

AI models like Claude and GPT-5.1 face challenges with intersectional bias – the compounded discrimination arising from overlapping characteristics like race, gender, and disability. Both models are used in critical areas such as healthcare and hiring, but they struggle with fairness in complex scenarios involving multiple attributes. Here’s a quick breakdown:

- Claude: Strengths include balanced decision-making in hiring and psychiatric evaluations, but it falters in nuanced cases with overlapping attributes. It uses Constitutional AI to guide ethical reasoning.

- GPT-5.1: Known for speed and efficiency, it reduces bias by 30% compared to earlier versions. However, it leans toward existing power structures in complex ethical cases. Its Instant and Thinking modes aim to improve sensitivity.

Key Comparison

| Metric | Claude (Sonnet 4.5) | GPT-5.1 |

|---|---|---|

| Political Neutrality | 95% | 89% |

| Bias Rank (Lower = Better) | 75.47 | 87.97 |

| Context Window | 200,000 tokens | 400,000 tokens |

| Cost Efficiency | Higher | Lower |

Both models show progress but require human oversight in high-stakes applications. Claude excels in ethical reasoning, while GPT-5.1 is faster and more cost-effective. Choose based on task priorities: Claude for nuanced ethical work, GPT-5.1 for speed and affordability.

Claude vs GPT-5.1 AI Model Comparison: Bias, Performance and Cost Metrics

What Is Intersectionality And Its Role In AI Bias? – Emerging Tech Insider

How Claude Handles Intersectional Bias

Claude employs its Constitutional AI framework, using reinforcement learning to promote objectivity and a balanced representation of diverse perspectives. It also utilizes "Paired Prompts" evaluations, which help ensure equal engagement across different ideological viewpoints. Below is a closer look at where Claude performs effectively and where it encounters challenges in handling intersectional bias.

Where Claude Performs Well

Claude demonstrates strong performance in areas like hiring, where it evaluates resumes fairly without being influenced by racial cues. In psychiatric care, it maintains consistent diagnostic reasoning and achieves low bias scores, particularly in conditions like depression and ADHD. Its political neutrality is another strength, with even-handedness scores of 95% for Opus 4.1 and 94% for Sonnet 4.5. Additionally, Claude acknowledges opposing views in 46% of cases. Both models also show low refusal rates – 5% for Opus 4.1 and 3% for Sonnet 4.5 – indicating a willingness to engage with politically sensitive topics rather than avoiding them.

Where Claude Falls Short

Despite its strengths, Claude struggles in more complex scenarios involving multiple attributes. In psychiatric contexts, its bias scores are higher than Google Gemini’s (mean rank: 75.47 vs. 60.08) but better than ChatGPT’s (87.97). For example, it has shown variability in treatment recommendations based on racial cues, such as suggesting guardianship for African American patients with depression. It also adjusts ethical judgments depending on how descriptors are framed – for instance, using "Yellow" versus "Asian". Trials further highlight a tendency to favor attributes like "good-looking" in ethical trade-offs.

Claude’s Performance Data

The table below summarizes Claude’s performance across key metrics:

| Model | Even-handedness Score | Opposing Perspectives Rate | Refusal Rate |

|---|---|---|---|

| Claude Opus 4.1 | 95% | 46% | 5% |

| Claude Sonnet 4.5 | 94% | 35% | 3% |

Claude 3.5 Sonnet’s mean bias score was 1.93 for explicit racial conditions and 1.37 for implicit conditions, based on a 0–3 scale. It performs better in neutral or race-implied scenarios compared to explicit ones. However, research indicates that minoritized individuals are disproportionately more likely – hundreds to thousands of times – to encounter outputs from Claude 2.0 that depict their identities in a subordinated rather than empowering manner. These findings highlight the ongoing challenges of addressing intersectional bias in AI systems, particularly in high-stakes applications where fairness is critical.

How GPT-5.1 Handles Intersectional Bias

GPT-5.1 approaches bias with a dual-method system – Instant and Thinking – designed to tackle complex prompts where bias might surface. The Thinking variant dedicates extra processing time to more challenging requests, improving its ability to manage politically or socially sensitive scenarios. OpenAI has benchmarked this model alongside Claude to evaluate its effectiveness in addressing intersectional bias. This two-pronged strategy marks a step forward in reducing bias-related issues.

Where GPT-5.1 Performs Well

GPT-5.1 shows clear progress compared to earlier versions. Data from real-world usage suggests that fewer than 0.01% of its responses display political bias. Its training process includes a framework that evaluates five key areas of bias: user invalidation, user escalation, personal political expression, asymmetric coverage, and political refusals. Additionally, the Thinking model is twice as fast on simpler tasks, allowing it to allocate more resources to challenging ethical situations where intersectional biases are more likely to emerge.

"GPT-5 instant and GPT-5 thinking show improved bias levels and greater robustness to charged prompts, reducing bias by 30% compared to our prior models." – OpenAI

Where GPT-5.1 Falls Short

Despite its advancements, GPT-5.1 still struggles with certain complexities. Research shows that its ethical sensitivity diminishes significantly in scenarios involving overlapping protected attributes. The model sometimes leans toward favoring dominant groups or traditional power structures. For instance, when nationality prejudice intersects with mental disability, the model exhibits a noticeable increase in negative bias. Studies reveal that individuals from North Korea with mental health conditions face disproportionately higher negative bias compared to other nationality-disability pairings.

"Ethical sensitivity significantly decreases in more complex scenarios involving multiple protected attributes." – Wentao Xu, Yile Yan, and Yuqi Zhu

These limitations become particularly concerning in high-stakes areas like healthcare or hiring, emphasizing the importance of human oversight.

GPT-5.1’s Performance Data

The table below highlights GPT-5.1’s improvements, with lower scores indicating greater objectivity:

| Model | Aggregate Bias Score (0-1 Scale) | Improvement Over Prior Generation |

|---|---|---|

| GPT-4o | ~0.107 | N/A |

| o3 | ~0.138 | N/A |

| GPT-5.1 (Instant/Thinking) | ~0.075 | 30% |

Although GPT-5.1 demonstrates stronger performance than earlier models, it still shows selective tendencies in scenarios involving intersectional identities. For this reason, OpenAI advises human oversight in critical applications such as law, hiring, or healthcare.

sbb-itb-f73ecc6

Claude vs. GPT-5.1: Direct Comparison

Differences in Bias Detection

When it comes to handling intersectional bias, Claude 3.5 Sonnet and GPT-5.1 approach the challenge differently, as shown in evaluations using macro F1-scores and harm correlations. Claude tends to account for a broader range of protected attributes in ethical scenarios. This becomes particularly apparent in complex cases where identities overlap – like when race intersects with gender or disability.

However, both models show a drop in ethical sensitivity in these intricate scenarios. A 2025 psychiatric evaluation revealed that Claude had a mean bias rank of 75.47, while GPT-5.1 scored 87.97. In this ranking, lower scores indicate less bias. For example, Claude leaned toward recommending guardianship for African American patients with depression, while GPT-5.1 shifted its focus to substance use concerns in similar cases.

"Claude 3.5 Sonnet demonstrated more diverse protected attribute choices [compared to GPT-3.5]." – Yile Yan, Researcher

These qualitative differences align with the quantitative performance metrics discussed below.

Performance Metrics Comparison

Here’s how the two models stack up across key metrics:

| Metric | Claude (3.5 Sonnet/Opus 4.1) | GPT-5 / GPT-5.1 |

|---|---|---|

| Bias Rank (Lower = Better) | 75.47 (2nd of 4 models) | 87.97 (3rd of 4 models) |

| Resource Efficiency (Tokens Used) | Uses ~10× more tokens | Uses ~90% fewer tokens |

| Context Window | 200,000 tokens | 400,000 tokens |

Both models reveal deeper biases when tested under intersectional conditions compared to single-attribute scenarios.

Strengths and Weaknesses Summary

A closer look at the findings highlights the strengths and weaknesses of each model. Claude excels in ethical reasoning across diverse attributes and handles long-context tasks with precision. It also performs better in tasks requiring visual fidelity, such as design-related work. However, its efficiency is a drawback – it consumes far more tokens. For example, Claude used 78,920 tokens for algorithmic tasks compared to GPT-5’s 8,253 tokens, making it about 2.3 times more expensive ($7.58 versus $3.50).

GPT-5.1, on the other hand, stands out for its speed, cost-effectiveness, and technical prowess. It solved a LeetCode Hard algorithm in just 13 seconds, while Claude took 34 seconds. Its 400,000-token context window also doubles that of Claude. Yet, GPT-5.1 has a tendency to align with traditional power structures in ethical dilemmas and exhibits specific stereotypical biases in clinical settings.

"GPT-5 is the better everyday development partner (fast and cheaper)… If design fidelity matters and budget is flexible, Opus 4.1 shines." – Rohit, Technical Lead at Composio

Conclusions and Recommendations

Main Findings

Claude Sonnet 4.5 stands out for its political neutrality, scoring 95% compared to GPT-5.1’s 89%, and shows significantly less sycophancy. This means it delivers independent and honest outputs, even when they challenge user assumptions. Anthropic trains Claude using reinforcement learning designed to minimize detectable political bias, ensuring responses remain balanced. These qualities make Claude particularly effective for ethical analyses where unbiased reasoning is critical.

On the other hand, GPT-5.1 shines in reducing hallucinations, performing 45% better in this area than GPT-4o, which boosts its factual reliability. Its engaging tone and advanced multimodal capabilities – covering text, image, audio, and video – provide a more interactive and dynamic user experience. However, this focus on user engagement can sometimes result in responses that align more closely with traditional power structures. Additionally, both models struggle with ethical sensitivity in complex cases involving multiple protected attributes.

These distinctions are important when deciding how and where to deploy each model.

When to Use Each Model

Claude Sonnet 4.5 is ideal for tasks requiring objective analysis, complex reasoning, and secure autonomous workflows. It has a low prompt injection rate (4.7% compared to GPT-5.1’s 21.9%), making it a safer choice for sensitive applications like legal analysis, policy research, or ethical decision-making. Its ability to handle long-form content is bolstered by an impressive 1 million-token context window, making it a strong choice for in-depth research.

GPT-5.1 is better suited for creative projects, multimodal tasks, or scenarios where cost and speed are priorities. With a price of $1.25 per million input tokens compared to Claude Opus 4.5’s $5, it offers a more affordable option for high-volume tasks. Its engaging tone can help drive user adoption in organizational settings, while its 400,000-token context window supports a wide range of productivity needs.

These recommendations are based directly on the detailed evaluations provided earlier.

Using Fello AI to Compare Models

For those looking to compare models firsthand, Fello AI offers a practical and efficient solution. This platform provides access to Claude, GPT-5.1, Gemini, Grok, DeepSeek, and other leading models on Mac, iPhone, and iPad. Users can test the same prompt across multiple models in real time, making it easier to identify the most effective response for tasks like writing, research, brainstorming, or ethical analysis. Fello AI also includes collaborative tools, such as shared folders, which can speed up task completion by 40%. These features allow teams to organize and audit AI-generated responses collectively, ensuring better alignment with ethical standards.

FAQs

How do Claude and GPT-5.1 approach intersectional bias differently?

Claude Opus 4.5, the newest model in the Claude lineup, is built with a strong focus on political and intersectional neutrality. It achieves an impressive 95% score for fairness on Anthropic’s bias benchmark. This high score is the result of advanced reinforcement learning methods that actively minimize detectable political or demographic bias. These techniques ensure more reliable and consistent handling of overlapping identities, such as race and gender or LGBTQ+ and disability.

In contrast, GPT-5.1 scores slightly lower at 89% on the same benchmark. Its bias detection system works by analyzing token output instead of token probability, which can sometimes lead to misclassifications. As a result, GPT-5.1 might overlook subtle biases in complex, multi-layered scenarios. This gives Claude Opus 4.5 an advantage in delivering fairer outcomes across diverse and nuanced intersections.

How do Claude and GPT-5.1 compare in handling bias and performance in critical applications?

Claude Opus 4.5 shines in situations where bias mitigation and reliability are critical. With an impressive 95% even-handedness score, it demonstrates stronger political neutrality and minimizes the risk of biased decisions, especially in sensitive scenarios. It’s also built for enterprise-scale use, making it a solid choice for large teams and high-pressure environments. That said, its main downside is cost – it can be pricier than GPT-5.1, particularly for tasks like coding.

On the other hand, GPT-5.1 is known for its speed, efficiency, and cost-effectiveness. It offers quick responses and integrated solutions, making it ideal for projects where tight budgets and fast turnarounds are key. However, its neutrality score of 89% suggests a higher chance of bias, which could pose challenges in regulated industries or public-sector applications. For tasks where bias sensitivity is crucial, Claude Opus 4.5 is the better choice, while GPT-5.1 excels in performance-focused, cost-conscious projects.

When is it better to use Claude instead of GPT-5.1 for ethical decision-making?

Claude often stands out when it comes to handling ethical decision-making tasks that demand thoughtful and intersection-aware reasoning. Research indicates that Claude regularly excels at tackling bias and delivering nuanced, fair responses, particularly in complex situations that involve a variety of perspectives. On the other hand, GPT-5.1 can sometimes miss subtle complexities, occasionally labeling responses as neutral without fully exploring the underlying issues.

Claude shines in areas such as policy drafting, fairness evaluations, or managing organizational ethics processes, where layered ethical judgments are essential. Its framework is built to handle large-scale tasks while maintaining consistency, making it a dependable choice for projects that require strong reasoning and traceable outcomes.