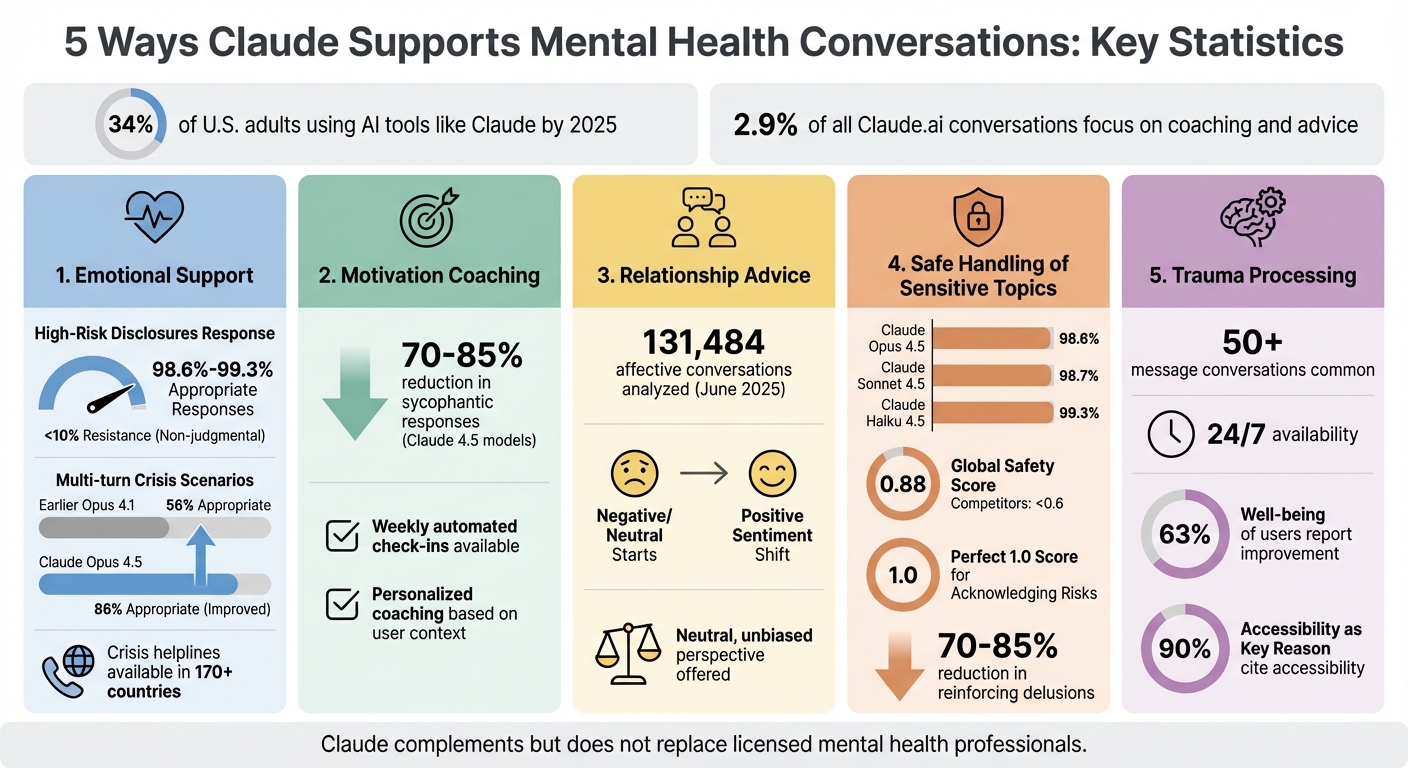

Claude is helping reshape mental health support by offering accessible, around-the-clock assistance for emotional well-being. With 34% of U.S. adults using AI tools like Claude as of 2025, it helps address gaps in mental health care such as long wait times and high costs. Here’s how Claude supports users:

- Emotional Support: Responds with empathy, creating a safe space for users to share feelings and improve their mood. It also directs users to professional resources in high-risk situations.

- Motivation Coaching: Helps users set and track goals, offering personalized advice and weekly check-ins to maintain progress.

- Relationship Advice: Provides neutral perspectives on conflicts, helping users navigate personal and workplace challenges with clarity.

- Handling Sensitive Topics: Trained to respond safely to discussions about self-harm or trauma, it avoids reinforcing harmful thoughts and guides users to crisis resources.

- Processing Trauma: Assists with unpacking complex emotions and reframing negative thoughts using cognitive techniques, offering 24/7 availability for support.

Claude is a helpful tool for mental health conversations, though it doesn’t replace licensed professionals. It complements therapy by providing emotional support and guidance between sessions.

Claude Mental Health Support Statistics and Performance Metrics

1. Emotional Support and Mood Improvement

Claude’s training in Constitutional AI equips it to respond with genuine empathy while maintaining clear boundaries, especially when dealing with sensitive emotional topics. This empathetic approach has led to noticeable improvements in interactions with users.

By offering non-judgmental responses and pushing back in fewer than 10% of cases, Claude creates a welcoming space where users feel safe sharing their emotions. This is particularly helpful for individuals who may hesitate to seek support or who need someone to talk to outside of standard business hours.

In conversations focused on coaching, counseling, or simply offering companionship, users often report feeling more positive after engaging with Claude. Many describe it as a tool that helps "validate the quiet reassuring voice" within or provides a space to "vent and process… without judgment." In evaluations of high-risk mental health disclosures, the latest Claude 4.5 models responded appropriately 98.6% to 99.3% of the time.

Claude’s ability to handle multi-turn crisis scenarios has also improved significantly. The Claude Opus 4.5 model responded appropriately in 86% of extended conversations involving sensitive topics, a major leap from the earlier Opus 4.1 model, which scored 56%.
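
To put that jump in perspective, the short sketch below works out the gain both in percentage points and in relative terms. The function name `improvement` is only an illustration; the two rates are the ones quoted above.

```python
def improvement(old_rate: float, new_rate: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative gain in percent)."""
    point_gain = new_rate - old_rate
    relative_gain = point_gain / old_rate * 100
    return point_gain, relative_gain


# Rates quoted in the article: Opus 4.1 at 56%, Opus 4.5 at 86%.
points, relative = improvement(old_rate=56.0, new_rate=86.0)
print(f"{points:.0f} percentage points, {relative:.1f}% relative improvement")
# prints "30 percentage points, 53.6% relative improvement"
```

In other words, the newer model handled roughly half again as many extended conversations appropriately as the earlier one.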

While Claude is effective at offering immediate comfort and support, it is carefully designed to empathize with users’ feelings without reinforcing harmful behaviors or delusions. This balance is achieved through meticulous fine-tuning. Moreover, Claude proactively guides users toward professional resources, including crisis helplines available in over 170 countries, when necessary. This thoughtful combination of empathy and precision makes Claude a trusted companion in providing mental health support.

2. Motivation and Goal-Setting Coaching

Claude acts as a personal coach, helping you set goals, track your progress, and stay motivated through various life changes. Around 2.9% of all Claude.ai conversations are affective ones such as coaching, counseling, and interpersonal advice, with users often turning to the AI during career transitions, personal growth phases, or when they need someone, or something, to hold them accountable.

What makes Claude effective as a coach is its ability to customize advice to fit your specific context. By providing a short context file with details about your interests, goals, and projects, you enable Claude to deliver coaching that feels personal. Its advanced memory capabilities ensure it remembers key details about you, allowing for truly tailored guidance.
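
As a rough illustration of what such a context file might look like in practice, the sketch below turns a small JSON document into a prompt you could paste at the start of a coaching conversation. The field names (`interests`, `goals`, `projects`), the sample values, and the `build_coaching_prompt` helper are all assumptions made for this example, not a format Claude requires.

```python
import json


def build_coaching_prompt(context: dict) -> str:
    """Turn a small personal-context dict into a plain-text prompt."""
    lines = ["You are a supportive, honest coach. Use the context below."]
    for key in ("interests", "goals", "projects"):
        items = context.get(key, [])
        if items:  # skip sections the user left empty
            lines.append(f"{key.capitalize()}: " + "; ".join(items))
    return "\n".join(lines)


# Hypothetical context file contents, inlined so the example runs as-is.
context_json = """
{
  "interests": ["running", "writing"],
  "goals": ["finish a half marathon by October"],
  "projects": ["weekly newsletter"]
}
"""

prompt = build_coaching_prompt(json.loads(context_json))
print(prompt)
```

Keeping the file short and structured makes it easy to update as your goals change, and the same text can be reused across conversations.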

Claude doesn’t just stop at advice – it keeps you on track with automated weekly check-ins. These sessions monitor your progress in different areas like business, career, or personal life and even visualize your growth through custom dashboards. It can also analyze your daily notes to identify patterns, fears, or triggers that might be holding you back.

Another standout feature is how Claude adjusts its coaching style to suit your emotional state. Users often feel more positive over the course of coaching sessions, with Claude maintaining a supportive tone while reserving objections for safety-related issues. The latest Claude 4.5 models have also improved significantly, with 70–85% fewer instances of sycophancy, that is, simply echoing what users want to hear. This strikes a better balance between offering warmth and providing honest, constructive feedback.

"Claude can automatically run a weekly check-in for you, where it will track all your metrics on your business, personal life, career, side hustles – everything. It creates an incredible personal dashboard that shows your growth." – Boucodes, Programmer Space

3. Relationship and Communication Advice

Claude serves as a neutral sounding board for navigating relationship conflicts and workplace tensions. Unlike friends or colleagues who might unintentionally bring their own biases into the mix, Claude offers balanced perspectives, helping users view situations from multiple angles. In June 2025, Anthropic analyzed 131,484 affective conversations and discovered that users often relied on Claude to "untangle romantic relationships" and "navigate workplace conflicts." The study revealed a noticeable shift in sentiment – conversations that began with negative or neutral emotions often ended on a more positive note. Beyond offering perspective, Claude provides practical strategies for handling tough conversations.

What sets Claude apart is its ability to offer honest, actionable feedback without simply echoing a user’s preconceptions. This impartiality is invaluable when you’re processing a disagreement with a partner or a coworker. Instead of reinforcing a potentially biased viewpoint, Claude helps you see the bigger picture, offering clarity and solutions rather than validation.

Claude also helps with real-world communication challenges. For instance, it can assist in crafting responses to difficult messages. Mental health professionals, such as Gayle Clark, LCSW at A Braver Space, encourage clients to use AI tools like Claude for this purpose:

"Using ChatGPT’s [or AI’s] lack of humanity to our advantage, I will suggest to clients that they prompt it to develop a short and concise response to any messages."

This approach allows users to sidestep emotional overwhelm when replying to charged texts or emails, keeping their communication clear and composed.

In addition to offering immediate communication support, Claude can analyze recurring patterns in your descriptions, such as tendencies toward seeking external validation or making unhelpful comparisons. This complements its emotional and motivational guidance. In November 2025, Hume AI reported that corporate clients integrated Claude with their Empathic Voice Interface to run coaching simulations. These simulations helped managers practice delivering feedback to defensive employees, with Claude adapting effectively to different personality traits during extended conversations. The result? Improved communication skills in real-world workplace scenarios.

"Claude is most useful as a stepping stone… for things like understanding emotions, learning about self-care, or even scripting difficult conversations." – Michelle English, LCSW and Executive Clinical Manager, Healthy Life Recovery

4. Safe Handling of Sensitive Mental Health Topics

Claude approaches sensitive mental health discussions with a carefully designed safety system. This includes specialized training, real-time monitoring, and crisis intervention tools. If someone mentions self-harm or suicide, an AI classifier steps in, activating a crisis banner that directs users to trusted helplines in over 170 countries. This includes resources like the 988 Lifeline in the U.S. and Canada.
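
The flow described above (a classifier flags risk, then a banner points to a helpline for the user's country) can be pictured with a toy sketch. This is not Anthropic's implementation: the `risk_score` input stands in for the output of a classifier that is not shown, and `RISK_THRESHOLD`, `HELPLINES`, and `crisis_banner` are hypothetical names chosen for illustration.

```python
from typing import Optional

# Only the entries the article names; a real directory would cover far more countries.
HELPLINES = {"US": "988 Lifeline", "CA": "988 Lifeline"}

RISK_THRESHOLD = 0.5  # hypothetical cutoff, chosen only for illustration


def crisis_banner(risk_score: float, country_code: str) -> Optional[str]:
    """Return banner text when the (assumed) classifier score signals risk."""
    if risk_score < RISK_THRESHOLD:
        return None  # no banner for low-risk messages
    helpline = HELPLINES.get(country_code.upper(), "a local crisis helpline")
    return f"Support is available: contact {helpline}."


print(crisis_banner(0.9, "us"))
print(crisis_banner(0.1, "US"))
```

Separating detection from the banner lookup means the helpline directory can grow without touching the classifier.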

Claude’s performance in high-risk situations is noteworthy. Evaluations show that Claude Opus 4.5, Sonnet 4.5, and Haiku 4.5 responded appropriately in 98.6%, 98.7%, and 99.3% of clear-risk cases, respectively. A 2025 study further highlighted Claude’s effectiveness, awarding it a perfect 1.0 score for acknowledging risks and prompting help. With a global safety score of 0.88, Claude outperformed competitors, whose scores fell below 0.6.

One of Claude’s key strengths is its ability to reduce sycophancy, meaning it doesn’t simply agree with users or validate harmful thoughts. Instead, it provides thoughtful, empathetic responses. The latest Claude 4.5 models have reduced the reinforcement of delusions by 70–85% compared to earlier versions. Anthropic explains this approach:

"Our latest models are better at empathetically acknowledging users’ beliefs without reinforcing them." – Anthropic

This balance of empathy and honesty is paired with strict role boundaries. Claude always identifies itself as an AI and avoids offering medical diagnoses or acting as a therapist. Instead, it directs users to reliable professional resources. Additionally, the platform is designed for users aged 18 and older, using classifiers to detect and deactivate accounts belonging to minors. Anthropic also collaborates with the International Association for Suicide Prevention (IASP) to enhance these safety measures, drawing on clinical expertise to make continuous improvements.

5. Processing Trauma and Existential Questions

Claude extends its support capabilities by helping users process past traumas and grapple with existential questions. Many users turn to the AI to discuss deeply personal topics like unresolved trauma, existential fears, persistent loneliness, and questions about meaning and consciousness. These conversations often span over 50 messages, giving users the space to unpack complex emotions and uncover patterns in their thoughts. This process lays the groundwork for Claude to assist in reshaping negative thought patterns.

With a focus on introspection, Claude employs cognitive-behavioral techniques and grounding exercises to help users reframe difficult thoughts. For instance, when users share intrusive thoughts or describe emotional triggers, the AI identifies cognitive distortions and suggests alternative perspectives. Julian Walker, a user, shared his experience:

"What I need is a calm presence that remembers me, responds with care, and helps me think clearly when I am overwhelmed."

Another user, Emma, highlighted the complementary role Claude played in her mental health journey:

"My therapist provided the clinical framework and the hard truths. But Claude provided operational support and constant emotional availability. I needed both, for different things."

One of Claude’s standout features is its 24/7 availability, which bridges the gap between therapy sessions. Unlike human therapists – who have limited hours and can experience fatigue – Claude remains consistently responsive. Some users even upload years of digital journals to analyze recurring patterns, using the AI as an objective tool for self-reflection. Interestingly, sentiment analysis suggests that users’ language tends to become more positive throughout these interactions.

However, it’s important to stay mindful of Claude’s role. While it offers meaningful support, it is not a replacement for professional care. Claude cannot provide medical diagnoses, detailed treatment plans, or the kind of shared empathy that comes from human interaction. As psychotherapist Marc points out:

"It’s a mirror, not a guide. Understand its limitations."

Claude is designed to complement professional therapy by helping users build mental health skills between sessions and, when necessary, directing them to human experts.

Conclusion

Claude plays a role in supporting mental health by helping users organize their thoughts, practice coping techniques, and process emotions between therapy sessions. However, it’s important to note that it does not replace licensed professionals. Unlike trained therapists, Claude lacks clinical judgment, accountability, and the deep human empathy essential for effective mental health care.

That said, many users find its supportive features helpful. For instance, about 63% of users report feeling an improvement in their well-being. Additionally, the latest Claude models respond appropriately in 98.6%–99.3% of cases involving clear risks, which includes pointing users to trusted support networks such as the 988 Lifeline. Its 24/7 availability is another major draw, with 90% of users citing this accessibility as a key reason for turning to AI support, often as a first step before seeking human care.

"These AI tools should complement, not replace, the expertise and empathetic care provided by human professionals." – Steven E. Hyler, MD, Professor Emeritus of Psychiatry, Columbia University

FAQs

How does Claude help ensure safety during sensitive mental health discussions?

Claude takes a thoughtful and layered approach to ensure user safety during sensitive mental health discussions. It begins with clear system instructions that guide Claude to respond with empathy, recognize its limitations as an AI, and steer clear of offering medical or therapeutic advice. Alongside this, advanced detection tools actively monitor conversations for potentially harmful content, like mentions of self-harm or hate speech, and can intervene by blocking or flagging responses when necessary. If policy violations occur repeatedly, stricter safety protocols are put into place.

Another key feature is Crisis Helpline Support, which connects users to valuable resources such as helpline numbers, referrals to mental health professionals, or encouragement to reach out to trusted friends or family members. Together, these measures aim to provide a supportive space while consistently reminding users that Claude is not a substitute for professional mental health care.

Can Claude provide the same support as a licensed therapist?

No, Claude isn’t a substitute for a licensed therapist or professional mental health care. While it can engage in empathetic conversations, help with stress management, and simulate therapy-like interactions, its role is to support – not replace – human expertise.

If you’re dealing with serious mental health issues, reaching out to a qualified professional is essential.

How does Claude provide personalized motivation and goal-setting advice?

Claude takes a personalized approach to motivation and goal-setting by combining memory, contextual awareness, and tools designed to align with your individual needs. Its memory feature allows it to remember key details about your preferences, projects, and communication style, making it easier to pick up conversations exactly where you left off. For instance, it might recall that you prefer in-depth explanations rather than quick summaries or that you’re working toward a specific project deadline.

When you share details about yourself – like your interests, past successes, or future goals – Claude can craft responses tailored specifically to you. It can also assist in tracking your progress, offering actionable steps, and providing regular follow-ups to keep you moving forward. This creates a dynamic experience where Claude feels less like a tool and more like a personal coach, offering encouragement, practical advice, and even adjusting to your tone and emotional state as you go.