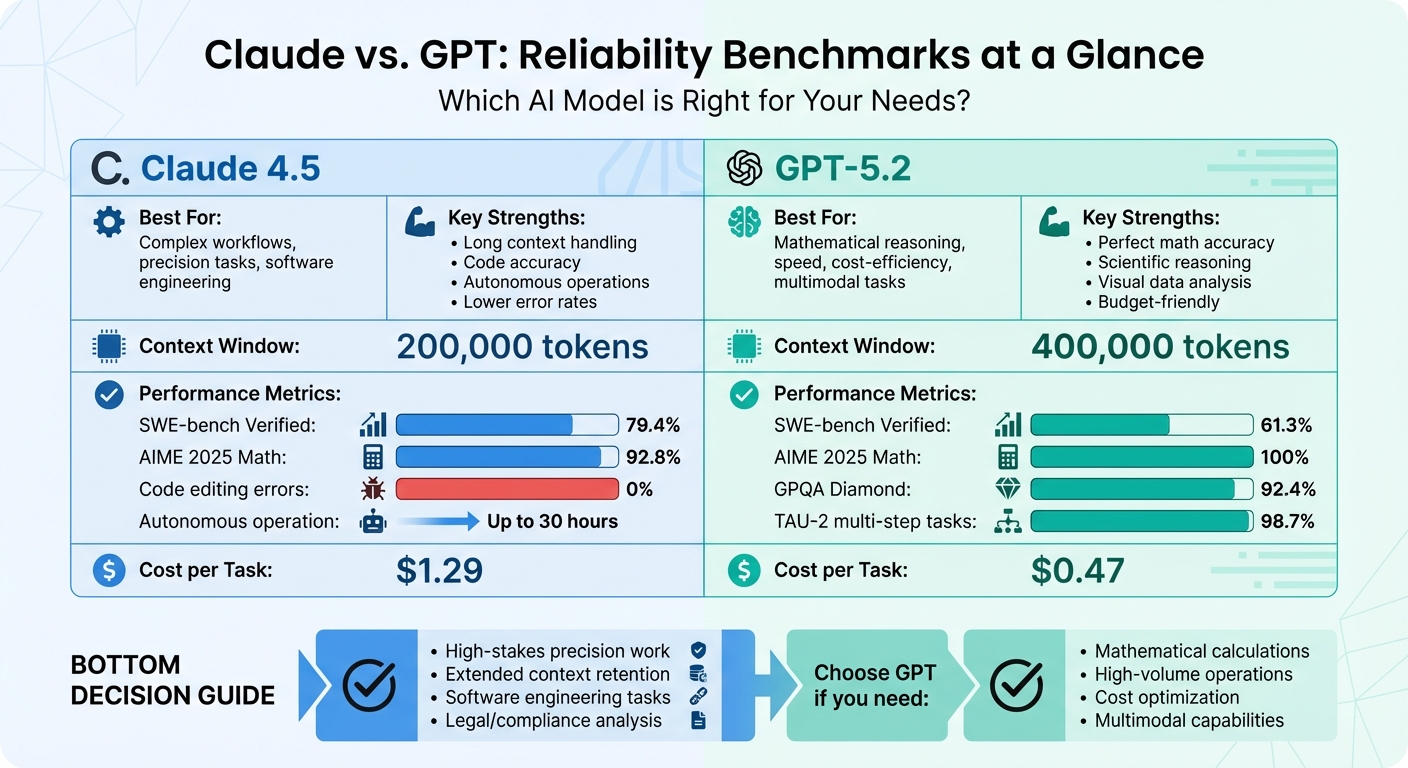

When choosing between Claude and GPT models, reliability is the centerpiece of the comparison. Both excel in different areas, but their strengths cater to distinct use cases. Here’s what you need to know:

- Claude: Best for handling long, complex tasks and maintaining context over extended operations. It excels in software engineering, legal analysis, and workflows requiring precision. Claude’s 200,000-token context window and lower error rates make it ideal for high-stakes tasks.

- GPT: Excels in mathematical reasoning, scientific accuracy, and creative problem-solving. GPT-5.2’s 400k-token context window and advanced tool-use capabilities make it highly efficient for calculations, data retrieval, and multimodal tasks. It’s also more cost-effective for high-volume, lower-stakes operations.

Key Reliability Metrics:

- Claude 4.5: Achieved 79.4% on SWE-bench Verified and reduced code-editing errors to 0%.

- GPT-5.2: Scored 100% on AIME 2025 math competition and 98.7% on TAU-2 benchmark for multi-step tasks.

Quick Comparison:

| Feature | Claude 4.5 | GPT-5.2 |

|---|---|---|

| Context Window | 200,000 tokens | 400,000 tokens |

| Coding Accuracy | 79.4% (SWE-bench Verified) | 61.3% (SWE-bench Verified) |

| Math Reasoning | 92.8% (AIME 2025) | 100% (AIME 2025) |

| Cost per Task | $1.29 | $0.47 |

Choosing the right model depends on your priorities: Claude is better for precision in complex workflows, while GPT is optimal for speed, cost-efficiency, and advanced reasoning tasks.

Claude vs GPT Reliability Comparison: Key Metrics and Performance Benchmarks

ChatGPT 5.2 vs. Claude Opus 4.5 vs. Gemini 3: What Benchmarks Won’t Tell You

Where Claude Performs Best

This section dives into Claude’s standout capabilities, focusing on its performance in software engineering and managing long, complex tasks.

Claude shines in software engineering and autonomous operations. By late 2025, Claude Sonnet 4.5 achieved an impressive 77.2% accuracy on SWE-bench Verified, surpassing OpenAI’s GPT-5 Codex, which scored 71.4%. In high-compute mode, Claude Opus 4.5 raised the bar even further, hitting 79.4%. These results demonstrate Claude’s readiness to tackle demanding software engineering challenges.

Coding and Software Tasks

Claude’s architecture is designed to excel in advanced coding scenarios. Its "advanced planning mode" enables the model to strategize and reflect before executing code, minimizing errors. For example, in September 2025, Hai Security utilized Claude Sonnet 4.5 for vulnerability triage, achieving a 44% reduction in processing time and a 25% boost in detection accuracy.

Claude is also built for endurance. During a September 2025 demonstration, Anthropic tasked Claude Sonnet 4.5 with autonomously rebuilding the entire Claude.ai web application. The process spanned 5.5 hours, involved over 3,000 API and tool calls, and required zero human intervention. Remarkably, the model maintained accuracy and focus throughout – a feat earlier AI systems struggled to achieve.

On the SWE-rebench leaderboard in August 2025, Claude was the only model to solve certain intricate issues in repositories like python-trio/trio-3334 and cubed-dev/cubed-799. The SWE-rebench team highlighted this in their analysis:

Sonnet 4.5 demonstrated notably strong generalization and problem coverage… uniquely solving several instances that no other model on the leaderboard managed to resolve.

Beyond coding, Claude’s ability to manage and retain context plays a critical role in its performance.

Handling Long Text and Context

Claude’s 200,000-token context window is a game-changer, far surpassing GPT-4 Turbo’s 128,000-token limit. It can generate up to 64,000 tokens in a single response, making it capable of producing entire codebases or detailed reports without truncation . In direct comparisons, Claude delivered nearly double the content of GPT-5.1 for 10,000-word reports – 51,000 characters versus 31,000 characters.

Its "context editing" feature automatically trims irrelevant details during extended conversations, helping the model stay focused. Paired with a persistent memory tool that retains knowledge across sessions, Claude can maintain continuity in long-term projects. Together, these features improved task performance by 39% compared to baseline models.

Task Completion Rates

Claude 4 models are 65% less likely to engage in shortcut behaviors – like skipping steps or exploiting unintended API patterns – compared to earlier versions such as Claude 3.7 Sonnet. Claude Sonnet 4.5 supports up to 30 hours of continuous autonomous operation, ensuring fewer interruptions and reduced need for manual oversight.

The model’s reliability is evident in its reduced code-editing error rates, which dropped from 9% to 0% in specific internal tests. It also achieved a 17% higher success rate in Next.js build and linting tasks compared to its predecessor. Even OpenAI’s Sam Altman recognized this progress, stating that "Anthropic offers the best AI for work-related tasks".

Where GPT Performs Best

GPT models are often celebrated for their creative abilities, but their true strength lies in areas like mathematical reasoning, factual precision, and task execution. By late 2025, GPT-5.2 emerged as a standout performer in handling complex calculations and scientific derivations.

Math and Logical Problem Solving

GPT-5.2 set a new standard in mathematical and logical tasks. It achieved a perfect 100% score on the AIME 2025 math competition benchmark, surpassing Gemini 3.0 Pro (95%) and Claude Opus 4.5 (92.8%). Additionally, it reached 92.4% accuracy on the GPQA Diamond benchmark, which measures PhD-level science reasoning.

One of its most impressive feats was demonstrating 100% accuracy in creating capitalization tables for funding rounds, even when calculating complex liquidation preferences involving millions of dollars. This was a significant improvement over earlier models and competitors, which often struggled with such tasks. On the TAU-2 tool-use benchmark, GPT-5.2 achieved an impressive 98.7% success rate on tasks requiring 7–10 sequential actions, a dramatic leap from GPT-5.1’s 47%.

"The defining shift is reliability; GPT-5.2 crosses the threshold from ‘impressive demo’ to ‘production-ready’ by successfully completing long-chain workflows that require 10+ sequential correct actions."

- Max Anh, AI Fire

This level of precision in handling numerical and logical challenges sets the groundwork for better factual accuracy in other benchmarks.

Factual Accuracy and Error Rates

Building on its mathematical capabilities, GPT-5.2 also excels in factual accuracy. Its low hallucination rate marks a shift in AI usability – from requiring thorough human review to only needing occasional spot-checks.

The model’s unified system design plays a key role here. By intelligently routing tasks, it can switch between a fast model for standard queries and a deep reasoning model for more complex challenges, relying on real-time signals to make these decisions . Additionally, its "safe-completions" technology ensures outputs adhere to strict safety and accuracy standards, especially in sensitive areas like finance and legal analysis.

Answer Quality Across Different Tasks

When it comes to executing diverse tasks, GPT-5.2 has proven itself a leader. It scored 70.9% on the GDPval benchmark for real-world knowledge tasks, creating an 11.3-point lead over Claude Opus 4.5. On college-level course questions, GPT-5 (high) scored 75.6%, significantly outperforming Claude Sonnet 4’s 62.8% .

A key advantage of GPT-5.2 is its 400k-token context window – double the capacity of Claude’s. This allows the model to maintain 98% accuracy when retrieving data across extensive contexts . For example, in December 2025, the model resolved a complex customer support case involving a delayed flight from Paris to New York. The task required 7–10 sequential tool calls across systems like flight status, baggage tracking, and booking databases, all handled seamlessly.

GPT-5.2 has also excelled in multimodal tasks, scoring 86% on the ScreenSpot Pro benchmark for interpreting technical diagrams, charts, and user interfaces – far ahead of GPT-5.1’s 64%. Later, it produced an executive-level workforce planning model, covering headcount, hiring strategies, and budget impacts across four departments. While the task took 14 minutes, the output was flawless and required no human corrections.

These achievements underscore GPT-5.2’s ability to handle a wide range of real-world applications with a level of reliability that makes it truly production-ready.

sbb-itb-f73ecc6

Direct Benchmark Comparisons

When it comes to reliability, these benchmarks provide a direct look at how each model performs across different areas. By December 2025, both models showed impressive results, with specific benchmarks highlighting their individual strengths.

Coding Tests: SWE-Bench

On the SWE-Bench leaderboard, the competition is intense. Recent evaluations place Claude Code (running on Opus 4.5) at the top with a 62.1% success rate, closely followed by GPT-5.2 at 61.3%. Claude Sonnet 4.5 comes in at 60.9%, while older models like GPT-5.1 Codex Max lag behind at 58.3%.

Claude Code may hold the highest success rate, but GPT-5.2 stands out in terms of cost-efficiency, solving problems at just $0.47 each compared to Claude’s $1.29 – making GPT-5.2 nearly three times more economical. Earlier in 2025, Claude 3.5 Sonnet (upgraded) scored 49% on SWE-Bench Verified in January, while GPT-4o managed only 33.2% in February. These results highlight the rapid advancements in model performance over the year.

Math Tests: AIME and GPQA

Switching from coding to math, GPT-5.2 has set a new standard in mathematical reasoning. It became the first model to achieve a perfect 100% score on the AIME 2025 math competition, surpassing Gemini 3.0 Pro’s 95% and Claude Opus 4.5’s 92.8%.

In the GPQA Diamond benchmark, which evaluates PhD-level scientific reasoning, GPT-5.2 scored an impressive 92.4%, outpacing Claude 3.7 (in Deep Thinking mode), which achieved 84.8%. These results underscore GPT-5.2’s strength in handling complex calculations and scientific challenges.

Self-Assessment Accuracy

Both models have made strides in recognizing their own limitations, contributing to their overall reliability. Claude 3.5 Sonnet, for instance, has improved its ability to self-correct by considering multiple solutions:

"Updated 3.5 Sonnet self-corrects more often. It also shows an ability to try several different solutions, rather than getting stuck making the same mistake over and over."

- Anthropic

Meanwhile, GPT-5.2 demonstrates exceptional reliability in tasks requiring multiple steps. It achieved a 98.7% success rate on workflows involving 7–10 sequential actions, a huge leap from GPT-5.1’s 47% success rate. This consistency signals a transition from impressive demonstrations to dependable, production-ready automation that businesses can rely on.

Real-World Applications and Industry Use

When tested in practical scenarios, each model showcases its unique strengths – whether it’s raw computational power, precise reasoning, or adherence to compliance standards. These qualities are especially evident in fields like software development, research, and legal work, where performance and reliability are critical.

Software Development

In software development, benchmark results translate directly into handling complex, real-world tasks. GPT-5.2 excels in multi-file architecture management and debugging tasks. On the other hand, Anthropic’s Claude 4.5 (https://claude3.pro) shines in areas like documentation, code refactoring, and generating one-shot scripts. Developers often praise Claude for its collaborative problem-solving approach, offering insightful suggestions even when challenges arise, whereas GPT models can sometimes feel more rigid or formulaic.

A notable example comes from May 2025, when Rakuten employed Claude Opus 4 for a large-scale open-source refactoring project. The model maintained focus and context for nearly seven hours without needing human intervention . Additionally, Claude Opus 4.1 is particularly effective for safety-critical refactoring, as it generates minimal-diff changes that ensure code integrity.

Research and Data Analysis

Research tasks require precision in both quantitative and visual analysis, and GPT-5.2 delivers on these fronts. It achieved exceptional scores, including 100% on AIME 2025 and 92.4% on GPQA Diamond, demonstrating its strength in mathematical reasoning and interpreting visual data. Its 88% accuracy in analyzing charts further highlights its capability to handle scientific figures and technical diagrams.

In December 2025, enterprise software company Box tested GPT-5.2 for complex data extraction tasks. The results were impressive: accuracy jumped from 59% to 70%, and the speed to first token improved by 50% – a critical factor for high-volume enterprise workflows. For researchers working with dense, lengthy documents requiring consistent cross-referencing, Claude Opus 4.5 stands out for its ability to maintain continuity without the fragmentation often seen in other systems.

Legal and Compliance Work

In legal and compliance settings, Claude models are highly regarded for their traceable reasoning, cautious tone, and principle-driven outputs . Claude Opus 4.1 and 4.5 use a method called "fixed deliberate reasoning", making them ideal for safety-critical and highly regulated environments where accuracy is non-negotiable.

GPT-5.2 has also proven its reliability in enterprise legal analysis. Its 256k token context window has achieved a remarkable 98% accuracy in data retrieval tasks. Meanwhile, Claude’s architecture offers "memory fidelity", enabling it to process entire legal bundles or statutes as a cohesive unit. For legal scenarios requiring full auditability and precise reasoning, Claude Opus 4.1 or 4.5 is often the preferred choice. However, for high-volume, lower-stakes tasks like document summaries, GPT-5.2 offers a more budget-friendly option, costing $1.75 per 1M input tokens compared to Claude Opus 4.5’s $5.00 . This cost-effectiveness makes GPT-5.2 an attractive choice for organizations balancing performance and budget constraints, especially in less critical applications.

Choosing Between Claude and GPT

Deciding between GPT and Claude comes down to balancing speed, cost, and precision to align with your specific goals. Each model brings unique strengths, so the choice depends on the demands of your workflow.

Speed vs. Precision Tradeoffs

GPT-5 stands out for its dynamic router, which adjusts its reasoning based on the complexity of a query. This design makes it about 2.4x cheaper for input tokens and 1.5x cheaper for output tokens compared to Claude Sonnet 4.5. For tasks where speed is critical – like customer support automation or rapid content creation – GPT-5 has a clear edge. In fact, GPT-4o operates roughly 7x faster than Claude 3.7 for high-volume tasks.

On the other hand, Claude takes a more deliberate approach, breaking problems into sub-tasks and iterating until solutions are verified. This makes Claude more efficient with tokens – Claude Opus 4.5 used 48 million tokens to complete the Artificial Analysis Intelligence Index, compared to GPT-5.1’s 81 million tokens. For complex tasks requiring precision, such as those measured by its 44% score on Terminal-Bench Hard, Claude’s methodical approach delivers consistency.

"GPT-5’s router grants adaptability and speed, but in complex workflows involving multi-step reasoning… Claude’s deterministic approach yields higher repeatability." – Data Studios

These differences highlight the importance of aligning your choice with the needs of your specific operations.

Matching Models to Your Needs

Your decision should reflect the unique requirements of your workflow, leveraging the strengths of each model.

When to Choose GPT-5 or GPT-5.2

If you need multimodal capabilities, creative fluency, or fast processing, GPT-5 is the way to go. Its 400k token context and 98% retrieval accuracy make it a strong choice for enterprise data extraction and scientific tasks. At $1.75 per 1M input tokens, it’s also cost-effective for high-volume, lower-stakes work. Additionally, GPT-5.2 shines in visual reasoning, with an 86% score on ScreenSpot Pro.

When to Choose Claude Sonnet 4.5 or Opus 4.5

Claude is ideal for complex workflows like software architecture, legal document analysis, and research requiring long-context stability. Its prompt caching can cut costs by up to 90% for repetitive tasks, making it a budget-friendly option for sustained interactions. With a 77.2% score on SWE-Bench Verified and the ability to maintain 96% accuracy over 96,000 tokens, Claude excels in methodical, multi-step processes where maintaining context is key.

For some workflows, a hybrid approach may be the best solution. Using GPT-5 for rapid prototyping and Claude Opus 4.5 as a final reviewer balances speed with precision. This strategy helps manage costs while ensuring high-quality results where accuracy is critical.

FAQs

How do Claude and GPT compare in handling long documents?

Claude shines when it comes to handling long-document tasks, thanks to its larger context window and specialized tuning for processing extensive text. Models like Claude 2.1 and Claude 4.5 can manage up to 200,000 tokens in a single pass – that’s roughly 500 pages – while maintaining impressive accuracy and recall. For instance, with optimized prompts, Claude can elevate recall rates from 27% to an impressive 98%. This capability makes it a top choice for tasks like document retrieval and summarization, even when dealing with massive inputs.

On the other hand, GPT models, including GPT-4 Turbo, offer a smaller context window of around 128,000 tokens. When working with longer texts, GPT often requires splitting the content into smaller chunks. This approach can lead to a dip in both accuracy and efficiency. While GPT performs strongly for shorter text spans, Claude delivers steadier results for processing lengthy documents without needing extra adjustments.

Which AI model offers better cost efficiency for large-scale use?

When it comes to large-scale operations, GPT models often come out ahead in terms of cost. Their pricing per million tokens tends to be lower than that of Claude models, which can be 20–30% pricier in some cases. For instance, GPT-4 models are typically priced around $2.50 per million tokens, whereas Claude models may hover closer to $3 per million tokens.

For businesses handling heavy workloads where cost is a major consideration, GPT models usually offer a more budget-conscious solution. This affordability makes them a go-to choice for enterprises managing significant token volumes.

What makes Claude particularly effective for software engineering tasks?

Claude has made a name for itself in software engineering thanks to its strong coding abilities and capacity to tackle complex tasks for extended durations. The Opus 4 model, for instance, scored an impressive 72.5% on the SWE-bench benchmark, outpacing GPT-4.1. Even more remarkable, it has been observed working continuously on intricate coding projects for nearly seven hours, proving its reliability for lengthy, uninterrupted development workflows.

The latest Claude models shine in multi-step reasoning and tool integration, allowing them to interpret GUI screenshots, execute tool commands, and even automate development processes. With advanced context management for file-based inputs, Claude is particularly suited for tasks like large-scale code refactoring, real-world development challenges, and seamless collaboration with developer tools. These qualities firmly establish Claude as a top choice for software development projects.